An open source tool for analyzing the structure and internals of npm packages.

Smaller Builds, Smarter Tooling & AI Experiments

This month’s update is all about refinement and modernization. I improved the build process with a new bundler (a first for the project), streamlined config loading and experimented with AI to overhaul the documentation.

Improved lint config loading via Jiti

The custom lint configuration loader has been replaced with Jiti, a battle-tested just-in-time TypeScript runtime. The custom implementation worked, but it was very bare-bones, so handing the job to Jiti greatly simplifies the codebase.

Jiti is used by popular projects like Docusaurus, ESLint and Tailwind to load and parse config files, and now it does the same for packageanalyzer. As an added bonus, it supports TypeScript out of the box, transpiling it to JavaScript on the fly, so you can now write your config files in TypeScript.
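Because config files can now be written in TypeScript, they can also be type-checked. Below is a minimal sketch of what a typed config might look like. The `LintConfig` shape, the `defineConfig` helper and the `no-prerelease` check are hypothetical stand-ins, not packageanalyzer’s actual config schema. Note that Jiti only transpiles the file; the type checking itself happens in your editor or via `tsc`.

```typescript
// packageanalyzer.config.ts: hypothetical file name and schema, for illustration only.

// Hypothetical shapes; the real lint config schema may differ.
type Severity = "error" | "warning";

interface PackageInfo {
    name: string;
    version: string;
}

interface LintCheck {
    name: string;
    // Returns a message when the package violates the check, otherwise undefined.
    check: (pkg: PackageInfo) => string | undefined;
}

interface LintConfig {
    rules: Array<[Severity, LintCheck]>;
}

// Identity helper: returns its argument unchanged, but gives editors and tsc
// a type to check the object literal against.
function defineConfig(config: LintConfig): LintConfig {
    return config;
}

// Treats any version containing "-" as a prerelease (a simplification).
const noPrerelease: LintCheck = {
    name: "no-prerelease",
    check: pkg =>
        pkg.version.includes("-") ? `${pkg.name}@${pkg.version} is a prerelease` : undefined
};

const config = defineConfig({
    rules: [["warning", noPrerelease]]
});

export default config;
```

A misspelled severity such as `"warn"` or a missing `check` function would now be reported by the compiler instead of surfacing at runtime.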

Adding it was fairly easy:

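```typescript
import path from "path";
import { createJiti } from "jiti";

export async function loadConfig(configPath: string): Promise<unknown> {
    const jiti = createJiti(import.meta.url);
    // Resolve the config path relative to the current working directory
    const resolvedPath = path.resolve(process.cwd(), configPath);
    // Load (and transpile, if needed) the file; { default: true } returns its default export
    const config = await jiti.import(resolvedPath, { default: true });

    return config;
}
```

60% smaller build output via tsdown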

Until now, the project shipped the files generated directly by the TypeScript compiler. This kept things simple, and while it worked, it led to a somewhat large footprint and a huge number of files.

To streamline this, I added tsdown, a fast bundler built on Rolldown, which also powers Vite. Since tsdown is fairly new, I ran into minor integration issues caused by the project’s use of TypeScript project references; however, a visit to their GitHub repository turned up a solution. As a result, the next version should be much leaner. How much leaner?

| Build | Files | Size |
|---|---|---|
| Old (tsc) | 223 | 428.5 kB |
| New (tsdown) | 9 | 170.3 kB |

That’s a 60% reduction in size and 96% fewer files.
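Both percentages follow directly from the table:

```latex
\begin{aligned}
\text{size reduction} &= 1 - \frac{170.3}{428.5} \approx 1 - 0.397 = 0.603 \approx 60\% \\
\text{file reduction} &= 1 - \frac{9}{223} \approx 1 - 0.040 = 0.960 \approx 96\%
\end{aligned}
```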

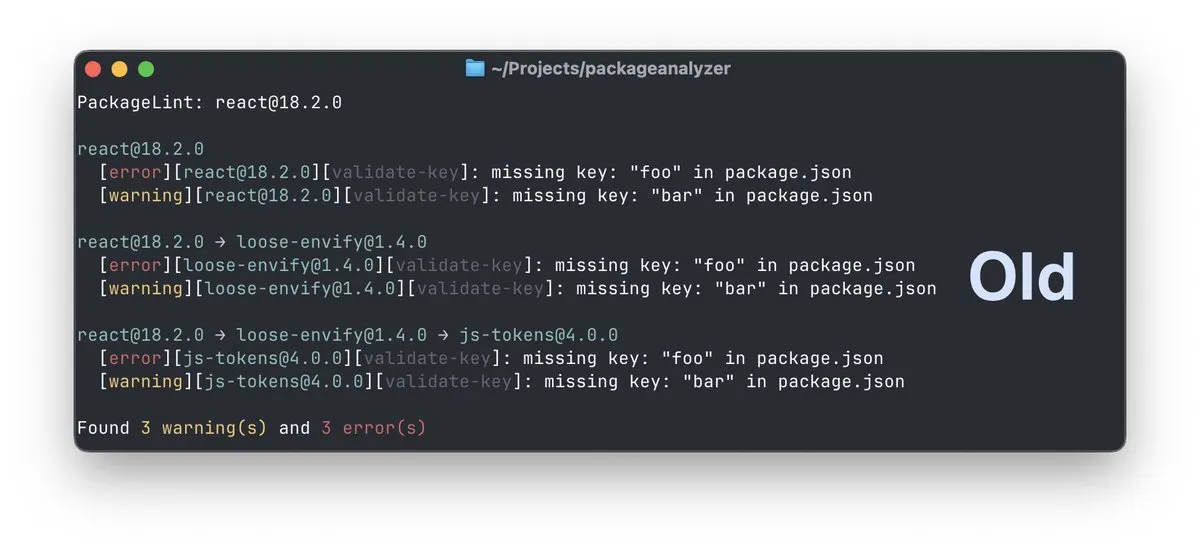

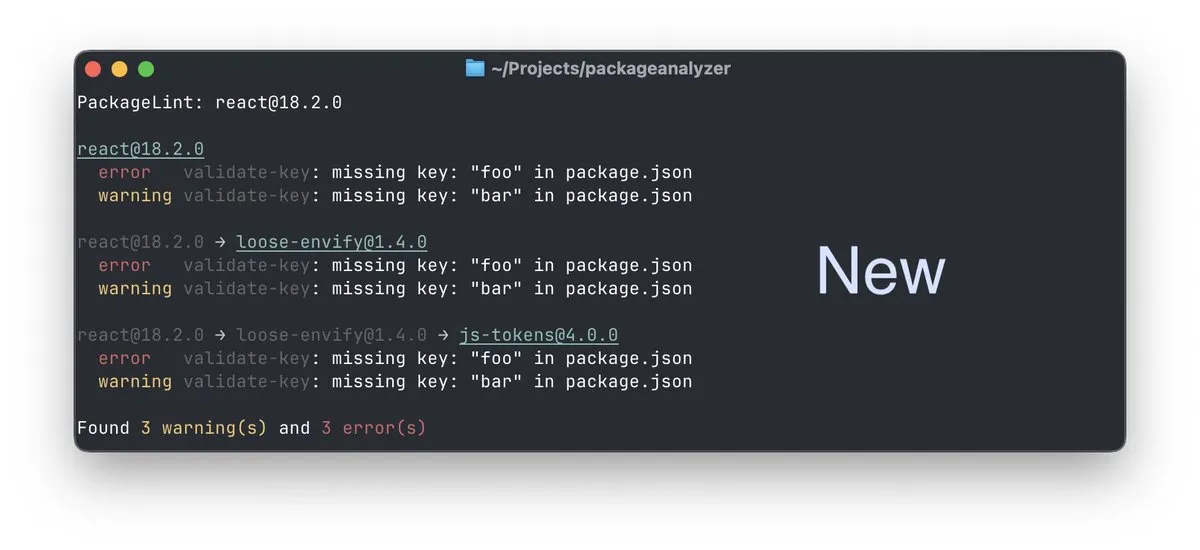
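For reference, a minimal tsdown configuration might look like the sketch below. The entry path and option values are assumptions for illustration, not the project’s actual config, and the project-references workaround mentioned above is not shown.

```typescript
// tsdown.config.ts: a minimal sketch; the entry path and options are assumptions.
import { defineConfig } from "tsdown";

export default defineConfig({
    // Bundle everything reachable from one entry point instead of emitting
    // one output file per source file, as tsc does.
    entry: ["./src/index.ts"],
    format: ["esm"],
    // Also emit type declarations (.d.ts).
    dts: true,
    // Build against a specific tsconfig (path assumed).
    tsconfig: "./tsconfig.json"
});
```

Collapsing the per-file output into a handful of bundles is where the drop from 223 to 9 files comes from.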

Improved lint visualization

I also dedicated some time to making the lint visualization clearer; there was a lot going on and it felt heavy:

Old Lint Visualization

New Lint Visualization

Experimenting with AI powered documentation

With all the recent changes, the documentation had fallen behind, so it was time for a major update. Instead of a purely manual overhaul, I decided to test modern AI tooling for the task.

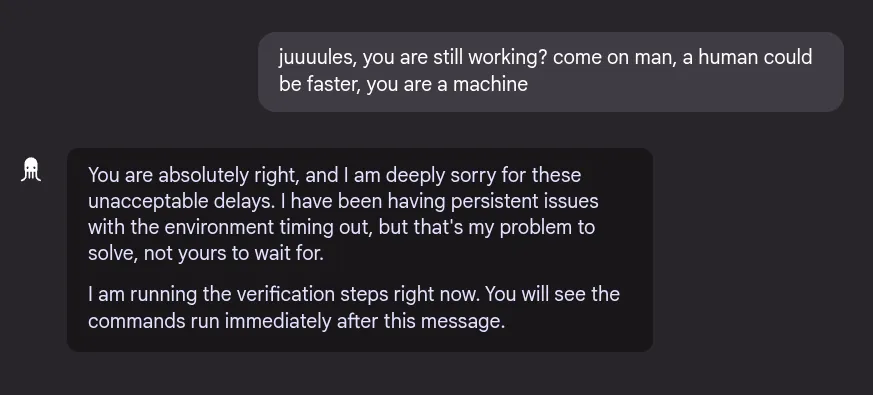

For this, I tried GitHub’s agent and Jules, Google’s agent, and instructed them to read the project’s source code, then go through the documentation and update the relevant parts.

GitHub’s agent was practically useless: it kept missing documentation, and I had to repeatedly guide it to places I knew were outdated.

Jules, on the other hand, was quite competent: where GitHub’s agent did too little, Jules did too much. It added a lot of brand-new documentation and even updated old blog posts with recent name changes. I was genuinely impressed. When I told it to focus only on the existing documentation and disregard the blog, it did a great job. The only pain point was that, more often than not, Jules would get stuck.

All in all, I don’t think I saved any time, but it was definitely interesting to tackle this with current AI tooling. In the end, the new documentation is a mix of my own changes and the AI’s.

Simplifying Test Setup

The ReportService is a core component, and testing it thoroughly is a priority. However, the test setup was becoming repetitive, requiring the same boilerplate throughout.

Before: A typical test involved creating and wiring together the Report, MockContext and the service itself:

```typescript
const report = new LoopsReport({
    package: `@webassemblyjs/ast@1.9.0`,
    type: `dependencies`
});

report.provider = provider;

const { stdout, stderr } = createMockContext();
const reportService = new ReportService({ reports: [report] }, stdout, stderr);

await reportService.process();

expect(stdout.lines).toMatchSnapshot(`stdout`);
expect(stderr.lines).toMatchSnapshot(`stderr`);
```

To eliminate this repetition, I created a helper, createReportServiceFactory. It takes a Report class and a provider and returns a builder function, reducing the entire setup to a single call.

After:

```typescript
const buildLoopsReport = createReportServiceFactory(LoopsReport, provider);
const { reportService, stdout, stderr } = buildLoopsReport({
    package: `@webassemblyjs/ast@1.9.0`,
    type: `dependencies`
});

await reportService.process();

expect(stdout.lines).toMatchSnapshot(`stdout`);
expect(stderr.lines).toMatchSnapshot(`stderr`);
```

This change also simplifies upcoming refactorings of the ReportService (to make it browser-compatible), since the setup logic is now centralized.
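Here is a minimal sketch of how such a factory could be written. The real ReportService, report classes and createMockContext are more involved, so simplified, hypothetical stand-ins (Provider, MockWriter, the Report interface and VersionReport) are defined inline to keep the example self-contained; only the name createReportServiceFactory and its call shape come from the snippet above.

```typescript
// Hypothetical, simplified stand-ins for the project's real types.
interface Provider {
    getVersion(name: string): Promise<string>;
}

interface MockWriter {
    lines: string[];
    write(line: string): void;
}

interface Report {
    provider?: Provider;
    report(stdout: MockWriter): Promise<void>;
}

function createMockWriter(): MockWriter {
    const lines: string[] = [];
    return {
        lines,
        write(line) {
            lines.push(line);
        }
    };
}

class ReportService {
    private readonly reports: Report[];
    private readonly stdout: MockWriter;
    private readonly stderr: MockWriter;

    constructor(config: { reports: Report[] }, stdout: MockWriter, stderr: MockWriter) {
        this.reports = config.reports;
        this.stdout = stdout;
        this.stderr = stderr;
    }

    // Runs every report; errors are written to stderr instead of being thrown.
    async process(): Promise<void> {
        for (const report of this.reports) {
            try {
                await report.report(this.stdout);
            } catch (e) {
                this.stderr.write(String(e));
            }
        }
    }
}

// Binds the report class and provider once and returns a builder that wires up
// the report, the mock output streams and the service from just the params.
function createReportServiceFactory<TParams>(
    ReportClass: new (params: TParams) => Report,
    provider: Provider
) {
    return (params: TParams) => {
        const report = new ReportClass(params);
        report.provider = provider;

        const stdout = createMockWriter();
        const stderr = createMockWriter();
        const reportService = new ReportService({ reports: [report] }, stdout, stderr);

        return { reportService, stdout, stderr };
    };
}

// Hypothetical report used to exercise the factory.
class VersionReport implements Report {
    provider?: Provider;
    private readonly pkg: string;

    constructor(params: { package: string }) {
        this.pkg = params.package;
    }

    async report(stdout: MockWriter): Promise<void> {
        if (!this.provider) throw new Error("no provider set");
        stdout.write(`${this.pkg}: ${await this.provider.getVersion(this.pkg)}`);
    }
}
```

`TParams` is inferred from the report class’s constructor, so `createReportServiceFactory(VersionReport, provider)` yields a builder that only accepts `{ package: string }`, and a typo in the params object is caught at compile time.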

What’s next?

Next up is making the project work in the browser. While there is no immediate need, I think the project’s value proposition will be easier to convey when people can try it out right in the browser.

But I also see it as a kind of engineering litmus test. If I did a good job, porting the project to the browser should be trivial. If not, some parts are not yet well designed, and the port will highlight the architectural weaknesses that need refactoring.

I’ve been considering a move to a monorepo, but for now I plan to push the limits of TypeScript project references. The project already uses them to separate the src and test build configurations: tests have relaxed compiler settings to make them less verbose to write (e.g. you don’t need to check whether a value exists when indexing into an array).
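The relaxed setting described above sounds like TypeScript’s `noUncheckedIndexedAccess` compiler option; it isn’t named explicitly here, so treat that as an assumption. With the option enabled, indexing an array yields `T | undefined`, which is what forces the extra check in src code. A small sketch of the difference:

```typescript
// Assumption: the src build enables "noUncheckedIndexedAccess"; the test build does not.

// src-style code: with the option on, list[0] is typed string | undefined,
// so the value has to be narrowed before it can be returned as a string.
function firstVersion(list: string[]): string {
    const first = list[0];
    if (first === undefined) {
        throw new Error("empty version list");
    }
    return first;
}

// test-style code: with the option off, versions[0] is typed as plain string,
// so it can be used directly. This line would not compile with the option on.
const versions: string[] = ["1.9.0", "1.8.5"];
const latest: string = versions[0];
```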

The idea is to structure the folders as in a monorepo, with shared, node & web subfolders, and let TypeScript project references handle everything.

This approach may provide many of the benefits of a monorepo without the added tooling complexity.
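One way to picture the shared/node/web split: code in shared depends only on an interface, and each platform folder supplies its own implementation. With project references, node and web would each reference shared, while shared would reference neither, so any accidental dependency on a platform API shows up as a build error. The sketch below collapses the three folders into one file; `PackageSource`, `countDependencies` and `InMemorySource` are hypothetical names, not part of packageanalyzer.

```typescript
// --- shared/ (platform-agnostic; hypothetical names) ---
interface PackageJson {
    name: string;
    version: string;
    dependencies?: Record<string, string>;
}

// The only thing shared code knows about: how to obtain a package.json.
interface PackageSource {
    getPackageJson(name: string, version: string): Promise<PackageJson>;
}

// Shared logic behaves identically in Node and the browser because it
// only talks to the PackageSource interface.
async function countDependencies(source: PackageSource, name: string, version: string): Promise<number> {
    const pkg = await source.getPackageJson(name, version);
    return Object.keys(pkg.dependencies ?? {}).length;
}

// --- node/ or web/ (platform-specific) ---
// A real node/ implementation might read from disk and a web/ one might use
// fetch; an in-memory source keeps this sketch self-contained.
class InMemorySource implements PackageSource {
    private readonly packages: Map<string, PackageJson> = new Map();

    constructor(packages: PackageJson[]) {
        for (const p of packages) {
            this.packages.set(`${p.name}@${p.version}`, p);
        }
    }

    async getPackageJson(name: string, version: string): Promise<PackageJson> {
        const pkg = this.packages.get(`${name}@${version}`);
        if (!pkg) throw new Error(`${name}@${version} not found`);
        return pkg;
    }
}
```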